The United Indian

OpenAI CEO Sam Altman stated in a recent post that “In 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies.”

Artificial Intelligence (AI) has rapidly become an integral part of our lives, shaping industries and revolutionizing the way we live and work. However, as AI systems grow more sophisticated, three terms have taken center stage in discussions about its future: Artificial General Intelligence (AGI), Artificial Super Intelligence (ASI), and AI Safety Level (ASL). Let’s delve into these concepts, their significance, and how they are poised to redefine our relationship with technology.

The AGI Revolution: What's Coming?

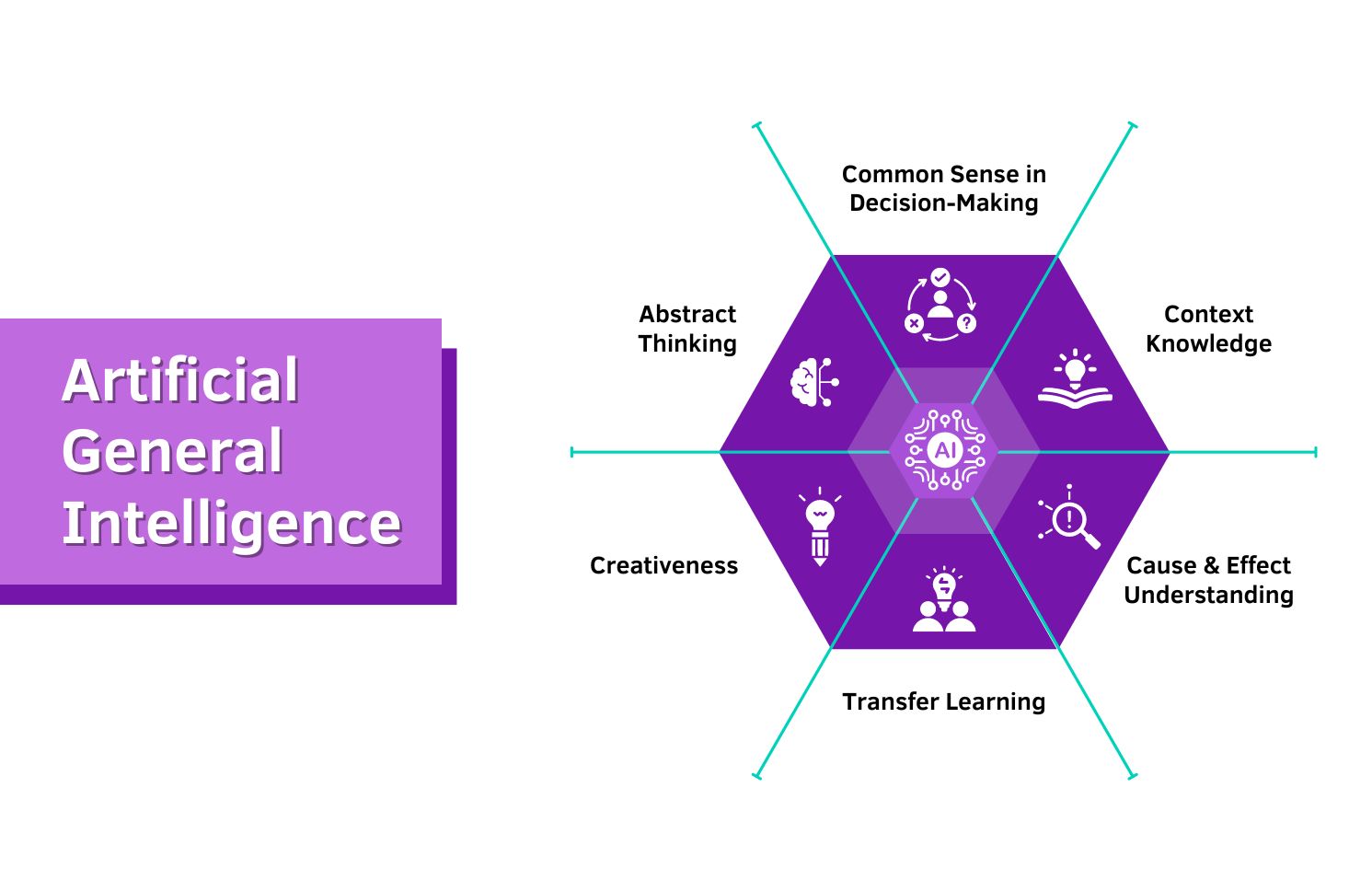

Imagine teaching a child. They learn one thing and can apply that knowledge to solve new, different problems. That's exactly what AGI aims to do – create AI systems that can think, learn, and adapt like humans. Unlike today's specialized AI that can only perform specific tasks (like ChatGPT for language or DALL-E for images), AGI would be able to understand and learn any intellectual task that a human can.

In simple terms, think of today's AI as a bunch of really smart specialists. You have AI that can play chess, write essays, or recognize faces – but each one can only do its specific job. Now, imagine an AI that can learn and do anything a human can, just like how we can learn to cook, drive, or solve math problems. That's AGI.

Unlike current AI systems that are great at specific tasks but struggle with anything outside their training, AGI would be able to:

- Learn new skills without specific programming

- Understand and apply knowledge across different fields

- Solve problems creatively, just like humans

- Improve itself without human intervention

According to recent surveys:

- 32% of AI researchers believe AGI will arrive within the next 10 years

- Another 37% predict it will emerge between 2033 and 2050

- Investment in AGI research has grown from $1 billion in 2015 to over $40 billion in 2023

- Economic forecasts suggest AGI could add $15.7 trillion to the global economy by 2030

- 73% of current jobs could be enhanced or transformed by AGI
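To put the investment figures above in perspective, the short sketch below works out the compound annual growth rate they imply. It takes the article's own numbers ($1 billion in 2015, $40 billion in 2023) at face value; the function name `implied_annual_growth` is a hypothetical helper introduced only for this illustration.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, an end value, and a span in years."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars (not independently verified here).
rate = implied_annual_growth(start=1.0, end=40.0, years=2023 - 2015)
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions, funding would have grown by roughly 59% per year, compounding over eight years.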

Why AGI Matters?

The development of AGI could be the biggest achievement in human history, and its potential impact could be staggering. It could help us:

- Find cures for diseases we thought were incurable

- Solve complex environmental challenges

- Make scientific discoveries we haven't even dreamed of

- Revolutionize education and personal development

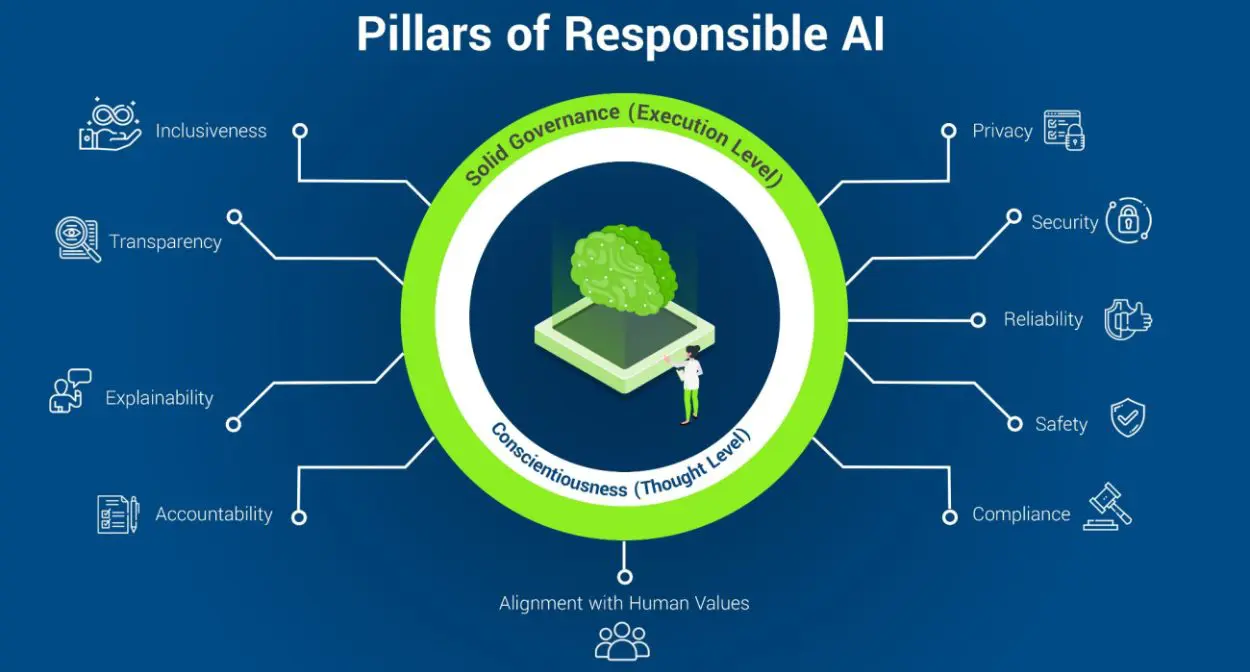

The Safety Challenge: Introducing ASL

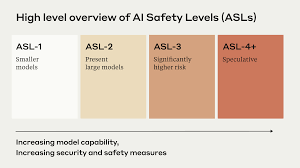

As AI systems become more powerful, ensuring their safety and ethical alignment becomes critical. Recent research found that only 14% of current AI systems meet Level 2 safety standards, a figure that should improve as safety frameworks mature. This is where the AI Safety Level (ASL) comes into play. Think of it as a safety rating system for AI, similar to how we rate earthquakes on the Richter scale or hold nuclear power plants to safety protocols. The ASL framework, introduced by Anthropic in its Responsible Scaling Policy, defines the following levels:

ASL-1: AI models at this level are simpler, smaller, and carry less risk. Only basic safety precautions are in place, because the technology itself is not especially risky.

ASL-2: At this level, bigger and more intricate AI models are introduced, requiring stricter safety measures to ensure appropriate use. Although these models are capable of handling multiple tasks, they are still generally predictable and manageable.

ASL-3: This level denotes a noticeably larger risk as AI models become more powerful. Because this advanced technology can now solve complicated problems, it might present unexpected threats if misused or left unchecked, so more advanced safety and security procedures are necessary.

ASL-4+ (Speculative): At this level, AI technology moves into a highly autonomous, speculative domain. Models may begin to behave autonomously, make judgments on their own, and perhaps even get around some safety precautions, posing complex and unprecedented threats.

The relationship between advances in AI capability, such as AGI, and AI safety is like a carefully choreographed dance: every advance in capability must be matched with corresponding safety measures. Investment in AI safety research currently stands at just 3% of total AI funding, but awareness of its importance is growing. With 89% of AI researchers agreeing that safety should be a bigger priority, a lot is happening in this direction.
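The idea that capability and safety must move in step can be sketched in a few lines of code. This is only an illustration of the tiers as described in this article, not an official implementation of any framework; the `ASL` enum and the `safeguards_sufficient` function are hypothetical names chosen for the example.

```python
from enum import IntEnum


class ASL(IntEnum):
    """Safety tiers as described in the article; the numbers are used only for ordering."""
    ASL_1 = 1
    ASL_2 = 2
    ASL_3 = 3
    ASL_4_PLUS = 4  # speculative tier


def safeguards_sufficient(capability: ASL, safeguards: ASL) -> bool:
    """Return True when the safeguards in place are at least as strict as the model's capability tier."""
    return safeguards >= capability


# A model assessed at ASL-3 that only has ASL-2 safeguards fails the check.
print(safeguards_sufficient(ASL.ASL_3, ASL.ASL_2))
# The same model with ASL-3 safeguards passes.
print(safeguards_sufficient(ASL.ASL_3, ASL.ASL_3))
```

Treating the tiers as ordered values keeps the rule simple: a system is considered ready only when its safeguards tier is not lower than its capability tier.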

ASI: The Promise and Peril of Superhuman Intelligence

Artificial Super Intelligence (ASI) is the stage of AI development where machines surpass human intelligence in all aspects, including reasoning, problem-solving, creativity, and emotional understanding. Unlike Artificial Narrow Intelligence, which excels at specific tasks, or Artificial General Intelligence, which would match human-like thinking across general tasks, ASI would possess the ability to outperform humans in virtually every domain.

The concept of ASI opens a world of immense possibilities. It could revolutionize healthcare by curing diseases once thought incurable, tackle global challenges like climate change, and drive technological innovations at an unprecedented pace. ASI could also automate complex decision-making, leading to more efficient and accurate outcomes in industries ranging from finance to space exploration.

However, the rise of ASI also comes with significant challenges and risks. A system with superintelligence could act unpredictably or in ways that conflict with human values if not properly controlled. Questions of safety, ethics, and alignment with human interests become crucial as ASI develops. Establishing frameworks like AI Safety Levels (ASL) is vital to ensure that such powerful systems remain beneficial and do not pose a threat to humanity.

While ASI is still a theoretical concept, its potential to reshape the future demands proactive research, regulation, and collaboration to ensure it serves as a tool for global progress rather than a source of unforeseen risks.

The Challenges We Face

Because we are still in the early stages of these advances in AI, the industry faces several challenges:

Speed vs. Safety

- AGI development is accelerating faster than safety measures

- Companies face pressure to release products quickly

- Safety regulations struggle to keep pace

Technical Hurdles

- Difficulty in defining and implementing human values

- Challenge of maintaining control over increasingly complex systems

- Balancing innovation with safety

Looking Ahead

With all the advances in AI, the future is exciting and full of potential. Experts predict the following key developments in the next few years:

- Mandatory ASL certification for advanced AI systems by 2026

- International AI safety standards by 2027

- Increased funding for safety research, projected to reach $10 billion annually by 2025

Conclusion

The development of AGI represents one of humanity's greatest achievements and challenges. As these advances in AI technology bring us to the brink of creating machines that could match or exceed human intelligence, ensuring proper safety measures isn't optional – it's essential for our future.

The good news? We're not starting from scratch. The ASL framework provides a roadmap for safe AI development. The challenge now is ensuring that safety keeps pace with advancement. After all, the goal isn't just to create intelligent machines, but to create beneficial ones that will help humanity thrive.

Remember: In the race between power and protection, the winner must be humanity. The future of AI isn't just about creating something powerful – it's about creating something wise.


©The United Indian 2024