The United Indian

The world of artificial intelligence is evolving at a breakneck pace. With each passing day, we witness groundbreaking advancements that push the boundaries of what's possible. One such breakthrough is Llama 3.1, an open-source language model developed by Meta AI. This powerful tool has the potential to revolutionize various industries and applications.

Understanding Llama 3.1

Meta Llama 3.1 is a large language model (LLM) designed to process and generate human-like text. It belongs to the family of transformer-based models, a type of architecture that has proven highly effective in natural language processing tasks.

Model Architecture

At its core, Llama 3.1 employs a decoder-only transformer architecture. Unlike the original encoder-decoder transformer, it uses a single stack of decoder layers with causal self-attention, a design that excels at capturing long-range dependencies within text. The model processes input text as a sequence of tokens and predicts the next token from the preceding context, using patterns learned from massive amounts of training data.
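Llama models are decoder-only transformers: every token position attends only to itself and the positions before it, which is what lets the model predict the next token from the preceding context alone. The following is a minimal pure-Python sketch of that causal (masked) scaled dot-product attention for a single head with toy vectors. The function name and inputs are illustrative; real implementations add learned projections, many heads, and optimized tensor libraries.

```python
import math


def causal_attention(q, k, v):
    """Scaled dot-product attention with a causal mask (single head, no batching).

    q, k, v are lists of vectors, one per token position. Position i may only
    attend to positions 0..i, so no token can "see" tokens that come after it.
    """
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        # Scores against the current and earlier positions only (the causal mask).
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in range(i + 1)]
        # Numerically stable softmax over the visible positions.
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the visible value vectors.
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out


# Toy example: three token positions with 2-dimensional vectors.
q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
result = causal_attention(q, k, v)
print(result[0])  # the first position can only attend to itself: [1.0, 2.0]
```

Changing the value vector of the last token leaves the outputs for the earlier positions untouched, which is the causal property in action.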

Meta has incorporated several refinements to the standard transformer architecture in Llama 3.1. Like earlier Llama models, it uses RMSNorm pre-normalization, SwiGLU activations, and rotary positional embeddings (RoPE), and all three model sizes use grouped-query attention to make inference more efficient. Meta has documented these design choices in its technical report on the Llama 3 model family.
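One refinement the Llama family has used since its first release is RMSNorm pre-normalization in place of standard LayerNorm. The sketch below is a plain-Python illustration of the computation, not Meta's code; the default `eps` value is an assumption chosen to keep the division safe.

```python
import math


def rms_norm(x, weight, eps=1e-5):
    """Root-mean-square normalization in the style used by Llama transformers.

    Unlike LayerNorm, RMSNorm does not subtract the mean: it rescales the vector
    by the reciprocal of its root mean square, then applies a learned
    per-dimension gain (`weight`). `eps` prevents division by zero.
    """
    mean_sq = sum(val * val for val in x) / len(x)
    scale = 1.0 / math.sqrt(mean_sq + eps)
    return [val * scale * w for val, w in zip(x, weight)]


x = [3.0, 4.0]  # mean square = (9 + 16) / 2 = 12.5
y = rms_norm(x, weight=[1.0, 1.0], eps=0.0)
print(y)  # a vector whose root mean square is 1 (up to rounding)
```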

The Llama System

Llama 3.1 is more than just a model; it's part of a comprehensive system. This system includes the underlying infrastructure, training data, and algorithms that work together to produce the impressive capabilities of the model.

One crucial aspect of the Llama system is the quality and diversity of the training data. A well-curated dataset is essential for any language model to learn accurate and informative patterns. Meta reports pre-training Llama 3.1 on more than 15 trillion tokens of text, enabling it to grasp a wide range of topics and styles.

Llama 3.1: A Deeper Dive into the Models

Meta's Llama 3.1 series comprises three primary models, differentiated by their parameter count: 8B, 70B, and 405B. Each model offers distinct capabilities and is tailored to specific use cases.

| | Llama 3.1 8B | Llama 3.1 70B | Llama 3.1 405B |
|---|---|---|---|
| Parameter count | 8 billion | 70 billion | 405 billion |
| Use cases | Ideal for mobile applications, edge devices, and proof-of-concept projects where computational resources are limited. | Suitable for a wide range of applications including chatbots, content creation, and research. | Ideal for demanding applications requiring advanced AI capabilities, such as scientific research, medical diagnosis, and complex problem-solving. |
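The parameter counts in the table translate directly into hardware requirements. As a rough sketch, the memory needed just to store the weights is the parameter count times the bytes per parameter. This ignores activations, the KV cache, and framework overhead, and uses the rounded counts from the model names; the numeric formats listed are common options, not a statement of exactly which formats Meta ships.

```python
# Rounded parameter counts taken from the Llama 3.1 model names.
MODELS = {"Llama 3.1 8B": 8e9, "Llama 3.1 70B": 70e9, "Llama 3.1 405B": 405e9}

# Bytes per parameter for some common numeric formats.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, dtype):
    """Approximate memory in GB (10**9 bytes) needed only to hold the weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in MODELS.items():
    row = ", ".join(f"{d}: {weight_memory_gb(params, d):,.1f} GB" for d in BYTES_PER_PARAM)
    print(f"{name} -> {row}")
```

At 16-bit precision the 405B model needs roughly 810 GB for its weights alone, while the 8B model needs about 16 GB, which is why the smaller model is the one suited to resource-constrained settings.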

Key Improvements in Llama 3.1

All three Meta Llama 3.1 models share several enhancements over their predecessors:

- Multilingualism: Official support for eight languages (English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai), making the models more versatile for global applications.

- Longer Context Length: A context window of up to 128K tokens, up from 8K in Llama 3, enabling the models to work with long documents and generate more coherent text over long inputs.

- Enhanced Reasoning: Improved performance on tasks requiring logical reasoning and problem-solving.

- Tool Use: The capacity to interact with external tools, expanding the model's capabilities and potential applications.
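A longer context window has a concrete memory cost: during generation, the model caches a key vector and a value vector for every token, layer, and key-value head. The sketch below estimates that cache for a full 128K-token (131,072-token) context. The shape values are assumptions based on the publicly released Llama 3.1 8B configuration (32 layers, 32 query heads sharing 8 key-value heads via grouped-query attention, head dimension 128), and 16-bit cache entries are assumed.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2, batch=1):
    """Bytes needed to cache keys and values during generation.

    The leading factor of 2 accounts for storing both a key and a value
    vector per layer, per key-value head, per token.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value * batch


# Assumed Llama 3.1 8B shape: 32 layers, 32 query heads sharing 8 KV heads
# (grouped-query attention), head dimension 128, 16-bit cache entries.
gqa = kv_cache_bytes(num_layers=32, num_kv_heads=8, head_dim=128, seq_len=131_072)
# The same model if each of the 32 query heads had its own KV head.
mha = kv_cache_bytes(num_layers=32, num_kv_heads=32, head_dim=128, seq_len=131_072)

print(f"GQA: {gqa / 2**30:.0f} GiB, full multi-head: {mha / 2**30:.0f} GiB")
```

Under these assumptions this prints 16 GiB versus 64 GiB: sharing each key-value head across four query heads cuts the cache to a quarter, which is one reason grouped-query attention matters for long contexts.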

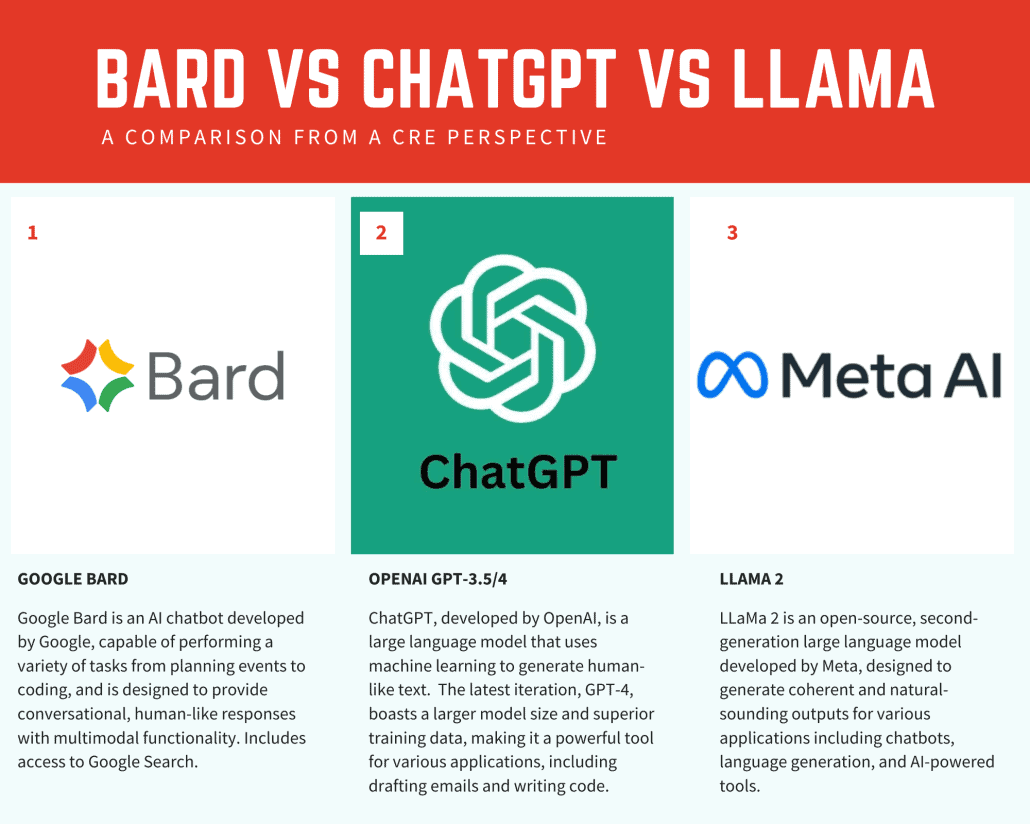

Comparison of AI Models: Llama 3.1, Nemotron, GPT-4

| Feature | Llama 3.1 | Nemotron | GPT-4 |
|---|---|---|---|
| Type | Large Language Model (LLM) | Large Language Model (LLM) | Large Language Model (LLM) |
| Developer | Meta AI | NVIDIA | OpenAI |
| Release date | July 2024 | 2023 | March 2023 |
| Open-source | Yes (open weights under the Llama 3.1 Community License) | Yes | No |
| Model size | 8B, 70B, and 405B parameters | Varies | Not publicly disclosed |
| Training data | Massive text and code corpus (more than 15 trillion tokens) | Details limited | Publicly available and licensed data; details not disclosed |
| Key strengths | Strong performance on reasoning tasks, code generation, and question answering | Focus on efficiency and speed | Exceptional performance across a wide range of tasks, including creative writing, code generation, and translation |
| Weaknesses | Can be prone to hallucinations and biases | Limited public information available | High computational cost, potential access restrictions |
| Use cases | Research, chatbot development, content generation, code assistance | Similar to Llama 3.1 | Various applications including research, content creation, customer service, education |

Benefits of Llama 3.1 Over Existing Models

Meta Llama 3.1 brings several advantages to the table that set it apart from many existing AI models:

- Open and Accessible: Unlike proprietary models such as GPT-4, Llama 3.1's weights are publicly downloadable under Meta's Llama 3.1 Community License. This allows researchers and developers to study, modify, and build upon the model more freely.

- Efficiency and Scalability: The family spans 8B to 405B parameters, so developers can pick a model that fits their hardware budget, and architectural choices such as grouped-query attention reduce the memory needed at inference time.

- Customizability: Because the weights are openly available, developers can fine-tune Llama 3.1 on their own domain-specific data, and a well-tuned specialized model can potentially outperform more general models on narrow tasks.

- Ethical Considerations: Meta has placed a strong emphasis on developing Llama 3.1 with ethical AI principles in mind, releasing safety tools such as Llama Guard 3 and Prompt Guard alongside the models to address concerns about bias and responsible AI use.

- Continuous Improvement: As part of an active research project, Llama 3.1 benefits from ongoing improvements and updates based on the latest advancements in AI research.

- Multilingual Capabilities: While not exclusively focused on multilingual tasks, Llama 3.1 is designed to perform well across multiple languages, making it versatile for global applications.

- Potential for Multi-modal Extensions: The architecture of Llama 3.1 lays the groundwork for future extensions into multi-modal AI, potentially allowing it to work with text, images, and other data types seamlessly.
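The article does not name a specific fine-tuning method. One widely used option for adapting open-weight models such as Llama is LoRA (low-rank adaptation), which freezes the original weights and trains a small low-rank update instead. The sketch below only counts trainable parameters for a single weight matrix; the 4096 × 4096 shape and rank 16 are illustrative assumptions, not settings published by Meta.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Trainable parameters LoRA adds to one frozen d_out x d_in weight matrix.

    LoRA learns an update B @ A added to the frozen weight W, with B of shape
    (d_out, rank) and A of shape (rank, d_in); only B and A are trained.
    """
    return d_out * rank + rank * d_in


# Hypothetical example: a 4096 x 4096 projection adapted with rank 16.
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=16)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

Training well under 1% of the parameters of each adapted matrix is what makes this style of customization practical on modest hardware.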

Real-World Applications of Llama 3.1

The capabilities of Meta Llama 3.1 open up a wide range of potential applications across various industries:

- Content Creation: Llama 3.1 can assist writers, marketers, and creators in generating high-quality text content, from articles and social media posts to creative stories.

- Customer Service: The model can power advanced chatbots and virtual assistants, providing more natural and context-aware responses to customer inquiries.

- Code Generation and Analysis: Developers can use Llama 3.1 to assist in writing code, debugging, and even explaining complex code structures.

- Education: Llama 3.1 can serve as an AI tutor, helping students understand complex topics and providing personalized learning experiences.

- Research and Data Analysis: The model's ability to process and synthesize large amounts of information makes it a valuable tool for researchers and data analysts.

- Language Translation: With its multilingual capabilities, Llama 3.1 can contribute to more accurate and context-aware language translation services.

- Healthcare: From assisting in medical research to helping with patient communication, Llama 3.1 has potential applications in various areas of healthcare.
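To use Llama 3.1 in a chatbot, such as the customer-service assistant described above, the conversation has to be rendered into the special-token prompt format the instruction-tuned models were trained on. The function below is a simplified, hypothetical sketch of that Llama 3-style layout. The official template can add further lines (for example, date information in the system message), so in practice it is safer to rely on a library helper such as the `apply_chat_template` method of Hugging Face tokenizers.

```python
def build_llama3_prompt(messages):
    """Render a chat history in a simplified Llama 3-style special-token format.

    Each message is wrapped in header tokens naming its role and closed with
    <|eot_id|>; an open assistant header at the end cues the model to write
    the next reply.
    """
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        parts.append(
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n{msg['content']}<|eot_id|>"
        )
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


conversation = [
    {"role": "system", "content": "You are a helpful customer-support assistant."},
    {"role": "user", "content": "Where can I track my order?"},
]
print(build_llama3_prompt(conversation))
```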

Challenges and Future Directions

While Meta Llama 3.1 represents a significant step forward in AI language models, it's important to acknowledge the challenges and areas for future development:

- Ethical AI: Ensuring that the model behaves ethically and doesn't perpetuate biases remains an ongoing challenge.

- Factual Accuracy: Like all language models, Llama 3.1 can sometimes generate plausible-sounding but incorrect information. Improving factual accuracy is a key area of focus.

- Contextual Understanding: While advanced, the model's ability to truly understand context and maintain coherence over long conversations or documents still has room for improvement.

- Resource Requirements: Running and fine-tuning Llama 3.1 still requires significant computational resources. For example, holding the 405B model's weights in 16-bit precision alone takes about 810 GB of memory, far more than any single GPU provides.

- Integration Challenges: Making the model easily integrable into existing systems and workflows presents both technical and practical challenges.
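One common integration pattern builds on the model's tool-use capability: the application describes its tools in the prompt, the model replies with a structured call, and the host code validates and executes it. The sketch below assumes the model was instructed to answer with a JSON object of the form `{"name": ..., "parameters": {...}}`; the tool `get_order_status` and the `TOOLS` registry are hypothetical stand-ins for an application's own functions.

```python
import json


# Hypothetical tool the host application exposes to the model.
def get_order_status(order_id):
    # Stand-in for a real database or API lookup.
    return {"order_id": order_id, "status": "shipped"}


TOOLS = {"get_order_status": get_order_status}


def dispatch_tool_call(model_output):
    """Parse a JSON tool call emitted by the model and run the matching tool.

    Assumes a reply of the form {"name": ..., "parameters": {...}}; malformed
    output, unknown tools, and bad arguments are reported instead of executed.
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return {"error": "model output was not valid JSON"}
    if not isinstance(call, dict):
        return {"error": "expected a JSON object"}
    name = call.get("name")
    tool = TOOLS.get(name) if isinstance(name, str) else None
    if tool is None:
        return {"error": f"unknown tool: {name!r}"}
    params = call.get("parameters", {})
    if not isinstance(params, dict):
        return {"error": "parameters must be a JSON object"}
    try:
        return tool(**params)
    except TypeError as exc:  # wrong or missing arguments
        return {"error": str(exc)}


print(dispatch_tool_call('{"name": "get_order_status", "parameters": {"order_id": "A123"}}'))
```

Rejecting malformed output and unknown tool names, rather than executing whatever the model produces, makes this kind of integration safer to run against real systems.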

Looking ahead, we can expect to see continued improvements in Llama 3.1 and its successors. Areas of future development might include enhanced multi-modal capabilities, improved efficiency, and more sophisticated fine-tuning techniques.

Conclusion

Meta Llama 3.1 represents an exciting step forward in the world of AI language models. With its refined architecture, efficiency improvements, and focus on accessibility, it has the potential to drive innovations across a wide range of applications. While it faces competition from other powerful models like GPT-4 and Nemotron, Llama 3.1's openly available weights, range of model sizes, and the backing of Meta's resources and community give it distinct advantages.

As AI continues to evolve at a rapid pace, models like Llama 3.1 are pushing the boundaries of what's possible in natural language processing and generation. Whether you're a researcher, developer, or simply someone interested in the future of AI, Llama 3.1 is certainly a model to watch. Its impact on fields ranging from content creation to scientific research could be profound, ushering in new possibilities for human-AI collaboration and problem-solving.


©The United Indian 2024